Move and This Interface Will Adjust Its 3D Form Accordingly

MIT students have developed a motion-based interface that allows users to build formations from afar in real time


In a world where we’re being conditioned to touch screens, a team of MIT researchers is trying to get consumers to, ironically, think different. Imagine a computing system where users in one location could gesture, and those motions would generate various designs, shapes and messages in physical form in a completely different location. It would almost be like reaching into a screen and touching what you see on the other side.

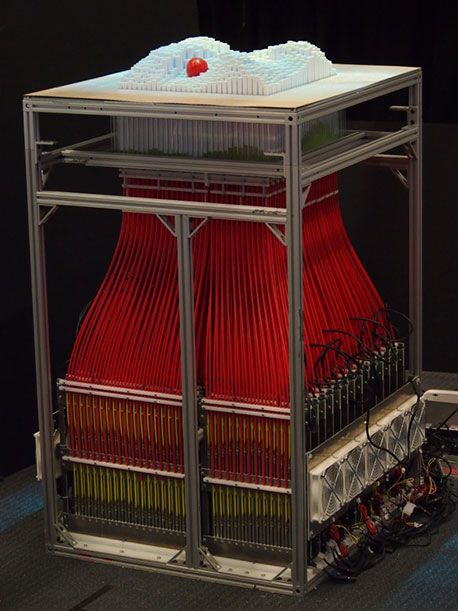

Dubbed inFORM, the interface consists of 900 motorized rectangular pegs that can be manipulated using a motion sensor such as Microsoft's Kinect. In the demonstration video, you can see how the pegs systematically rise up and take the form of a pair of fabricated hands to play with toys, like a ball, or page through a book. Much as with those pinscreen animation office toys, entire physical representations of towns and landscapes can instantly emerge and evolve before your eyes.
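To make the idea concrete, here is a minimal sketch of how a depth image from a motion sensor might be turned into peg heights. It is not the researchers' code. The 30-by-30 layout (one way to arrange 900 pegs), the 100 mm peg travel, and the function name `depth_to_peg_heights` are all assumptions made for illustration.

```python
# Illustrative sketch only: none of these names or numbers come from the inFORM project.
GRID = 30              # assumed 30 x 30 layout, which gives the article's 900 pegs
MAX_HEIGHT_MM = 100.0  # hypothetical maximum peg travel


def depth_to_peg_heights(depth, near, far, grid=GRID, max_height=MAX_HEIGHT_MM):
    """Downsample a 2D depth map (list of rows, values in mm) to grid x grid peg heights.

    Closer objects (smaller depth) produce taller pegs.
    Depths are clamped to the range [near, far] before scaling.
    """
    rows, cols = len(depth), len(depth[0])
    heights = []
    for gy in range(grid):
        # Source rows covered by this row of pegs (always at least one row).
        y0 = gy * rows // grid
        y1 = max(y0 + 1, (gy + 1) * rows // grid)
        peg_row = []
        for gx in range(grid):
            # Source columns covered by this peg (always at least one column).
            x0 = gx * cols // grid
            x1 = max(x0 + 1, (gx + 1) * cols // grid)
            block = [depth[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            mean_depth = sum(block) / len(block)
            clamped = min(max(mean_depth, near), far)
            # Linear map: depth == near gives max_height, depth == far gives 0.
            peg_row.append((far - clamped) / (far - near) * max_height)
        heights.append(peg_row)
    return heights
```

Averaging each block of depth pixels is only one possible choice. A real system would also have to deal with sensor noise and motor speed, which this sketch ignores.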

“We’re just happy getting people to think about interfacing using their sense of touch in addition to touch screens, which are nothing but pixels and purely visual information,” says Leithinger. “You can now see it can be a lot more than that.”

The PhD students envisioned the technology as a kind of “digital clay” and originally developed it for practical applications, such as architectural modeling. While 3D printers can produce miniature replicas that take as long as 10 hours to fully layer and dry, inFORM’s moldable flatbed can instantly model entire urban layouts and modify them on the fly. Geographers and urban planners could similarly produce maps and terrain models. There are potential uses in the medical field as well. A doctor, for instance, might review a 3D version of a CT scan with a patient.

The elaborate system is designed so that each peg is connected to a motor controlled by a laptop. But the inFORM technology isn’t meant to be a consumer product, at least not yet. “What you’re seeing is the early stages of a completely different kind of technology,” says Leithinger. “So the way we put this interface together wouldn’t be cost-effective enough for the mass market, but there are lessons that can be learned to make something based on the idea of 3D interfacing.”

The creators also don’t want anyone to confuse inFORM with a similar nascent technology called telepresence, where a person’s movements can be transmitted remotely to a different location. Even though telepresence robots like the popular prototype Monty can be controlled from afar to pick up objects, they’re limited to limb movements and other attributes of the human form.

“Our system allows for a lot more improv than these other technologies, like generating an object that interacts with another in real time,” says Follmer. “A telepresence robot may be able to pick up a ball, but it’s not as good at using a bucket to pick up a ball.”

As the pair explores the technology’s wide range of potential applications, they’re also aware of the current limitations. For now, the inFORM interface works only as a one-way system, meaning two people on separate continents won’t be able to use their own 3D surfaces to simultaneously hold hands. It also can’t create complex overhangs where a portion of the formation juts out horizontally (think: the diagram in the game Hangman). For that, you’ll still need a 3D printer.
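The overhang limitation is easier to see with a toy model. The sketch below assumes that each peg moves only up and down, so the surface can store just one height per column. The side-view grids and the helper names `to_heightfield` and `render` are invented for this illustration and are not part of inFORM.

```python
def to_heightfield(profile):
    """Reduce a side-view shape to one height per column.

    profile is a list of equal-length strings, top row first, where '#' is solid.
    Each column's height is set by its topmost solid cell.
    """
    rows = len(profile)
    heights = []
    for x in range(len(profile[0])):
        height = 0
        for y, line in enumerate(profile):
            if line[x] == "#":
                height = rows - y  # distance from the base to the topmost solid cell
                break
        heights.append(height)
    return heights


def render(heights, rows):
    """Draw what a row of vertical pegs would show: every column is solid up to its height."""
    return [
        "".join("#" if h >= rows - y else "." for h in heights)
        for y in range(rows)
    ]


# A Hangman-style gallows: the top beam juts out over empty space.
gallows = [
    "####",
    "#..#",
    "#...",
    "#...",
]

# A stepped hill with no overhang.
hill = [
    "..#.",
    ".##.",
    "###.",
]

gallows_on_pegs = render(to_heightfield(gallows), len(gallows))
hill_on_pegs = render(to_heightfield(hill), len(hill))
```

In this toy model the hill comes back unchanged, while the gallows turns into a solid block because the pegs must fill the space under the beam.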

“It’s possible to make the interactivity touchable and real on both ends and so we’re definitely exploring going in that direction,” says Leithinger. “We’re constantly getting emails from people telling us how the interface can be used to help blind people communicate better or for musicians, stuff even we’ve never thought about.”
